For this reason, we couldn’t use the technical methods only used in orchestrators such as Kubernetes, Rancher or OpenShift, or the proprietary services of public clouds, such as those offered by AWS and GCE. The system that supplies the certificates should run on any Docker platform, regardless of the orchestrator used, or the cloud.When we face the challenge of secure management of security, we place the following requirements on ourselves: Herein lies the challenge in finding solutions for the management of secrets in containers.

If the image does not contain sensitive information, can the secrets be consulted without performing a prior authentication procedure? If I have to perform that procedure, what mechanism should I use to do so? Is it possible to do it without secrets? However, this fact is, in and of itself, a problem.

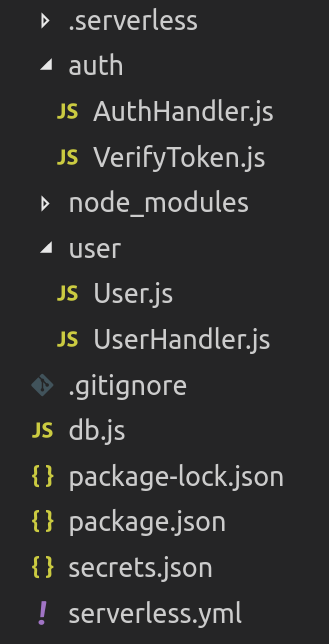

#Npm serverless secrets software

The solution consists of requesting secrets in runtime by means of some piece of software included in the image, or sidekick images solves the former problem, since the image is immutable and does not contain sensitive information. or we have to create different images for each environment, ignoring the immutable nature that the images should have. either we have to share those credentials in multiple environments, and we will be incurring in serious problems of confidentiality and segmentation of privileges. That said, it’s clear that if the image is created with credentials saved directly in its definition, we have two possibilities: That is, the images are created once, and that same image should run in any environment. The image of the container should be immutable. If we analyze the problem of generating images with stored secrets, we observe the following issues: Solutions from the legacy world for managing secrets are generally based on having them stored in the system permanently, or having software that takes care of asking third parties for those secrets. These solutions, if they do work for this type of nearly perpetual systems, are difficult to adapt to the world of containers. For example, the so-called “ Privileged Accounts Managers” whose brief is to take care of renovating secrets in systems with long lifecycles, and who have clients that allow to consult those secrets in runtime. Of course, for traditional systems, there are secure methods that allow the renovation of secrets, and their secure use by systems/software. Additionally, in the case of detection of a possible intrusion, renovating the compromised secrets turns into an arduous task, due to the simple fact that there may not be procedures for doing so, since it is not a task that is commonly performed. It is also not secure: a breach in a system with stored credentials allows those credentials to be used for an unlimited time. 
This is, unfortunately, a quite common case, because of the operational effort required to renovate secrets. There are probably countless systems in the IT ecosystem with service users that are configured with the same credentials, without an expiration date, that were generated when the system was implemented. This means that in many cases, it is decided to introduce the secrets in the system once, and they remain in a filesystem for years (at times, for many years). When containers are not used, software and its configuration are deployed in systems with a long lifecycle, which could even last years. It should be confidential and it is indispensable - for example, so that users and systems can connect to one another in a secure manner. Prior to this article, this analysis of serverless architectures or FaaS (Function as a Service) was prepared, to introduce this technology together with its value in business developments.Ī secret is a piece of information that is required for authentication, authorization, encryption and other tasks.

This article is the second part about serverless, where we will cover the integration of one of the most interesting products implementing this technology ( Fission) in OpenShift, RedHat’s PaaS platform.
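The following minimal Python sketch illustrates requesting a secret at runtime instead of baking it into the image. It makes no use of orchestrator-specific features. It assumes, hypothetically, that whoever starts the container supplies one of two variables:

- `<NAME>_FILE`, a path to a file made available at run time;
- `<NAME>`, the value itself.

This resembles the `*_FILE` convention some official Docker images follow. The variable names, the lookup order and the `SecretNotFound` class are illustrative choices, not part of the original article. The sketch also leaves open the bootstrap authentication question raised above.

```python
import os
from pathlib import Path
from typing import Mapping, Optional


class SecretNotFound(Exception):
    """Raised when a secret was not supplied to the container at run time."""


def load_secret(name: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Resolve a secret when the process starts, never from the image itself.

    Lookup order (an assumption of this sketch):
    1. <NAME>_FILE: path to a file injected into the container at run time.
    2. <NAME>: the value passed directly as an environment variable.
    """
    env = os.environ if env is None else env
    file_path = env.get(f"{name}_FILE")
    if file_path:
        # Secret files often end with a newline; strip it so the value is exact.
        return Path(file_path).read_text(encoding="utf-8").rstrip("\n")
    value = env.get(name)
    if value is not None:
        return value
    raise SecretNotFound(f"secret {name!r} was not provided at runtime")
```

With plain Docker, the same immutable image could receive a different secret per environment through a bind mount. For example: `docker run -v /path/on/host/db_password:/run/secrets/db_password:ro -e DB_PASSWORD_FILE=/run/secrets/db_password my-image`. The paths and image name there are placeholders.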

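The article points out two weaknesses of permanently stored credentials: they can be abused for an unlimited time, and they are hard to renew. A common mitigation is to treat each secret as a short-lived lease and fetch a fresh one at runtime when it expires. This is offered as a swapped-in illustration, not as the article's own solution. In the sketch below, `fetch` is a hypothetical callable standing in for whatever secrets backend is used. It is assumed to return the secret value and its time-to-live in seconds. The `margin` parameter is likewise an illustrative choice.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

# Hypothetical backend call: returns (secret_value, ttl_seconds).
Fetcher = Callable[[], Tuple[str, float]]


@dataclass
class _Lease:
    value: str
    expires_at: float


class LeasedSecret:
    """Caches a secret only until its lease expires, then fetches a fresh one."""

    def __init__(self, fetch: Fetcher,
                 clock: Callable[[], float] = time.monotonic,
                 margin: float = 5.0) -> None:
        self._fetch = fetch
        self._clock = clock
        self._margin = margin  # renew slightly before the lease actually ends
        self._lease: Optional[_Lease] = None

    def get(self) -> str:
        now = self._clock()
        # Fetch on first use, or once we are within `margin` seconds of expiry.
        if self._lease is None or now >= self._lease.expires_at - self._margin:
            value, ttl = self._fetch()
            self._lease = _Lease(value, now + ttl)
        return self._lease.value
```

A short TTL limits how long a leaked value stays useful, and it makes renewal a routine operation rather than an emergency procedure. It does not, by itself, answer how the process authenticates to the backend in the first place.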